The pace and scale of the shift toward investment in Generative Artificial Intelligence (AI) have been astounding. Last year saw over $29 billion in total AI venture capital (VC) deal volume, more than the previous five years combined.1 This happened while the broader global VC industry was suffering, with deal volumes down over 50% from 2021 levels and hitting a six-year low.2

In the first of a series of notes on the AI landscape, we seek to introduce the main concepts, parse the narratives and identify implications for investment portfolios.

What is it?

Every 10 to 15 years the technology industry goes through a platform shift. From Mainframes to PCs, the World Wide Web, Smartphones and Cloud, each of these shifts made available a new, modern framework on which software firms develop applications to deliver value-added services to enterprises and consumers. Although it’s still very early, Generative AI may be the next platform shift.

Generative AI has its roots in the explosion of applied Machine Learning, which came on the scene with the Cloud platform shift in 2013 and gave enterprises the ability to automate repetitive tasks under conditions of uncertainty at scale. The right level of abstraction here is pattern recognition, and for the last decade the industry has tried to identify problems to solve with it.

As computational resources on the Cloud have become increasingly powerful in recent years, data engineers have been able to train pattern recognition models on vast amounts of images, video and all forms of natural language. The latter effort has led to the development of Large Language Models (LLMs), the best known of which underpin ChatGPT, a product of the upstart OpenAI.

LLMs

LLMs are a type of Generative AI program that performs a variety of natural language processing (NLP) tasks. They use statistical means to recognize patterns in text and, given enough training text and model complexity, can recognize and produce an enormous amount of smartly written natural language. Classic use cases involve guiding a programmer with code snippets or tips, summarizing entire books and research papers, or writing seemingly original copy for the next marketing campaign.
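To make "statistical means to recognize patterns in text" concrete, here is a minimal sketch of a bigram model, which predicts the next word purely from how often word pairs appeared in its training text. This is a toy illustration and not how any production LLM is built; the corpus and function names are hypothetical and invented for this example.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for each word, which words follow it and how often."""
    words = text.lower().split()
    follower_counts = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        follower_counts[current_word][next_word] += 1
    return follower_counts


def most_likely_next(model, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical toy corpus, invented for illustration only.
corpus = "the market fell today and the market rose later and the fund rose too"
model = train_bigram_model(corpus)

print(most_likely_next(model, "the"))  # prints "market"
```

The model has no notion of whether "market" is the right word; it only knows that "market" followed "the" more often than anything else in the text it saw. LLMs apply the same idea with far more text and far more model complexity.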

ChatGPT is reported to have been trained on 570 gigabytes of data, or roughly 300 billion words. In 2022, OpenAI revealed it spent more than $3 million on computing resources alone to train GPT-3, running across 285,000 processor cores and 10,000 graphics processing units (GPUs).3 In other words, OpenAI is using a supercomputer to train a virtual assistant on a vast corpus of human knowledge.

Hallucinations

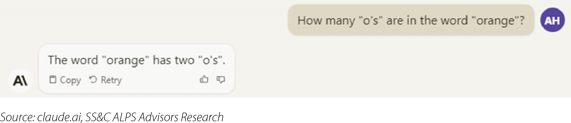

Because LLMs are pattern recognizers, it’s important to remember they are not technically providing a correct answer to a given question in the way our brains distinguish between fact and fantasy. As leading researchers from the Oxford Internet Institute have concluded, “LLMs are designed to produce helpful and convincing responses without any overriding guarantees regarding their accuracy or alignment with fact.”4 While model tuning techniques that attempt to reduce falsity in LLMs are advancing at breakneck speed,5 a key construct of these models is that they are stochastic rather than deterministic. In other words, they intentionally incorporate randomness into their modelling process, rather than returning the same answer for a given set of inputs (which is how database software behaves). False responses from an LLM are called “hallucinations”, and the accompanying image shows an example of one, produced by the chatbot service built on Anthropic’s Claude v2.1 LLM.

If you stare long enough, you might be able to see two “o’s” as well. Perhaps the huge, generalized LLM is geared more for activities and industries where there is no correct answer, such as the arts, media or search.
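The difference between deterministic and stochastic behavior described above can be sketched in a few lines. This is a simplified illustration under stated assumptions, not the internals of any particular LLM: the candidate answers, their scores, the temperature value and the function names are all hypothetical. A deterministic system always returns the top-scoring answer, while a stochastic one draws an answer at random in proportion to its probability, so lower-scoring answers are sometimes chosen.

```python
import math
import random


def softmax_with_temperature(scores, temperature):
    """Turn raw scores into probabilities; higher temperature flattens them."""
    scaled = [s / temperature for s in scores]
    max_scaled = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - max_scaled) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def pick_deterministic(candidates, scores):
    """Always return the highest-scoring candidate (database-like behavior)."""
    best = max(range(len(scores)), key=lambda i: scores[i])
    return candidates[best]


def pick_stochastic(candidates, scores, temperature, rng):
    """Draw one candidate at random, weighted by its probability."""
    probs = softmax_with_temperature(scores, temperature)
    return rng.choices(candidates, weights=probs, k=1)[0]


# Hypothetical candidates and scores, invented for illustration only.
candidates = ["Paris", "Lyon", "Rome"]
scores = [2.0, 1.0, 0.5]
rng = random.Random(0)  # seeded so repeated runs draw the same samples

print(pick_deterministic(candidates, scores))  # prints "Paris" every time
print([pick_stochastic(candidates, scores, 1.0, rng) for _ in range(5)])
```

In this sketch, lowering the temperature concentrates probability on the top answer and raising it spreads probability across the alternatives, which is one way the trade-off between consistency and variety can be tuned.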


Commoditization and Specialization

Another trend in the landscape is the open-sourcing of LLMs. While the models of the first big movers, OpenAI and Anthropic, are closed, meaning their architectures and coefficients are not available to the open-source development community, last year Meta and many others open-sourced their LLMs. Given that much of the data on which LLMs are trained is public, it’s possible the open-sourcing of these models will commoditize them, requiring their purveyors to continue to develop value-added services, with sustainable pricing power, to sit on top of their LLMs.

In fact, much of the venture investment has started to move into verticalized implementations of Generative AI, where datasets are smaller and industry-specific. Given the lower volume of the data, these models are less costly to train, more easily tuned and return value to the business faster.1 This specialization of Generative AI looks promising and may lead the way in a platform shift.

The Hyperscalers are All In

Publicly traded companies involved in Generative AI have been the major market narrative. Most of the focus has been on chipmakers like Nvidia, which has doubled its year-over-year revenue on surging demand for its powerful GPU processors, as well as on cloud infrastructure providers such as Alphabet, Meta and Amazon, also known as the hyperscalers. Curiously, these four publicly traded firms were involved in 85% of the VC deal volume in AI last year.6

The interesting part about these transactions is that, reportedly, most of the investment the hyperscalers are making in LLM companies is in “credits” for their cloud infrastructure.7 In other words, the “investment” is immediate revenue recognition on their books, with little to no transparency on the original pricing of these credits or how that pricing has evolved over time. The market loves this, and as owners of all of these companies, so do we. But we’re growing cautious of the potential for these transactions to create distortions in pricing around this market theme at a time when the capital cycle is well under way. We’ve seen this movie before.

Important Disclosures & Definitions

1 Hodgson, L., ‘VCs go vertical in backing specialized AI’, Pitchbook, January 29, 2024.

2 Stanford, K., ‘Final data for 2023 illustrates the extent of VC’s tough year’, Pitchbook, January 6, 2024.

3 Javaji, S., ‘ChatGPT – What? Why? And How?’, Microsoft Educator Developer Blog, April 24, 2023.

4 Mittelstadt, B., Wachter, S. & Russell, C., ‘To protect science, we must use LLMs as zero-shot translators’, Nature Human Behaviour, November 20, 2023.

5 Zhao, W. X., Zhou, K., Li, J., Tang, T., Wang, X., Hou, Y., ... & Wen, J. R., ‘A survey of large language models’, arXiv (arXiv:2303.18223v13).

6 Agrawal, A., ‘New VC in town: “MANG”’, Apporv’s Notes, January 18, 2024.

7 Albergotti, R., ‘OpenAI has received just a fraction of Microsoft’s $10 billion investment’, Semafor, November 18, 2023.

AAI000635 03/05/2025